I started running a cross-functional experiment board because I was tired of marketing “tests” that lived and died in Google Docs — promising ideas that never translated into pipeline. What changed everything was treating experiments like product development: clear hypotheses, shared ownership, measurable success criteria, and fast handoffs into the revenue engine. Over the past few years I’ve used this approach to reliably add $8–12k/month in pipeline from small, repeatable marketing experiments. Here’s the exact way I run the board so teams actually deliver outcomes instead of dashboard vanity metrics.

Why a cross-functional experiment board matters

Most marketing tests fail for operational reasons, not because the idea was bad. Common failure modes I’ve seen:

- Lack of alignment on the goal — is this awareness, leads, or revenue?

- No measurable success criteria — we track clicks but not pipeline contribution

- Ownership gaps — campaign built but nobody hands off leads to sales or ops

- Slow implementation — months between ideation and launch, losing context

A central experiment board fixes these by making experiments visible to growth, product, sales, and revenue ops, and by embedding guardrails that force measurable outcomes. The result: more tests launched, faster learning, and predictable pipeline uplift.

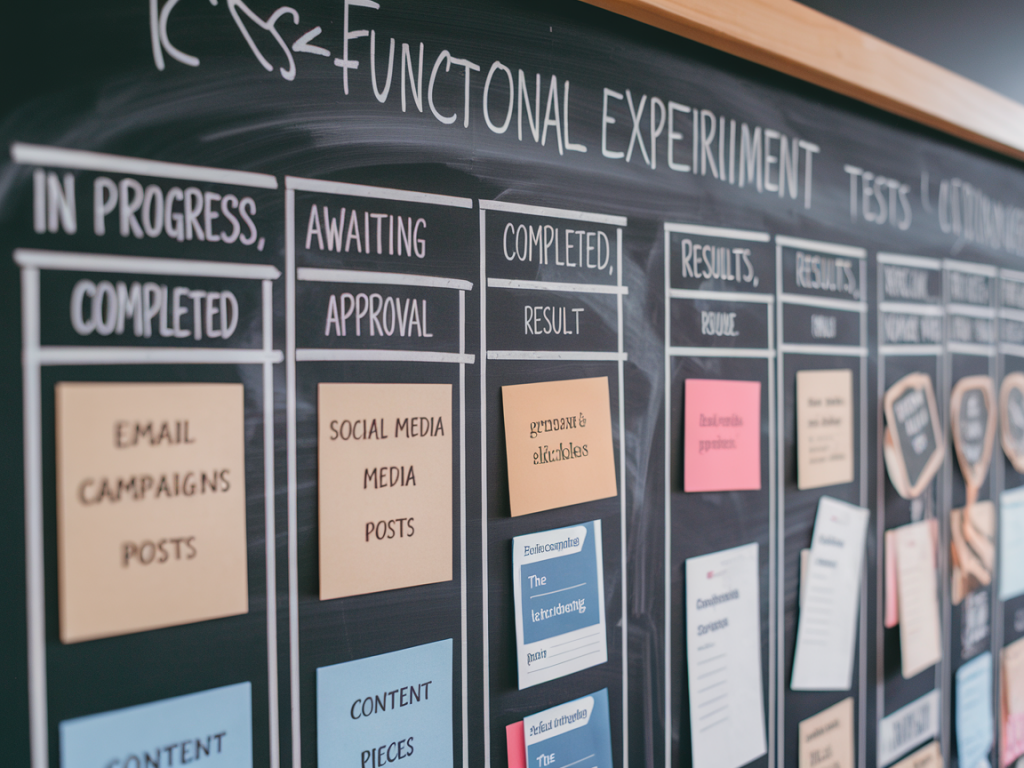

Core structure of the board (what I track)

I use a single table view (Notion or Airtable work well) with the following columns. You can copy this into your tool of choice: Jira, Monday, or a dedicated experimentation platform such as GrowthBook will also work.

| Column | Purpose |
|---|---|
| Experiment name | Short, descriptive title |
| Owner | Person accountable for delivery (not just the idea) |
| Cross-functional partners | Design, product, sales, ops involved |
| Hypothesis | Clear “If we X, then Y, because Z” |
| Primary metric | What success looks like (pipeline, SQLs, MQLs) |
| Secondary metrics | Supporting signals (CTR, demo rate, CAC) |
| Estimated impact | Monthly pipeline $ (conservative and optimistic) |
| Priority score | Use RICE or ICE for prioritization |
| Status | Backlog, In progress, Live, Analyze, Complete |
| Start / End dates | Planned timeline |
| Handoff artifacts | Playbook for SDRs, UTM tagging, destination workflows |
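If you keep the board in code, or sync it through an API, the columns above map naturally onto a typed record. The sketch below is a minimal Python version, assuming Python 3.9 or later; the field names and the `advance` helper are hypothetical and are not the schema of Notion, Airtable, or any other tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date
from enum import Enum
from typing import Optional


class Status(Enum):
    # The five board stages, declared in workflow order.
    BACKLOG = "Backlog"
    IN_PROGRESS = "In progress"
    LIVE = "Live"
    ANALYZE = "Analyze"
    COMPLETE = "Complete"


@dataclass
class ExperimentCard:
    """One row of the board; field names are illustrative, not a tool's schema."""
    name: str
    owner: str                       # accountable for delivery, not just the idea
    partners: list[str]              # design, product, sales, ops
    hypothesis: str                  # "If we X, then Y, because Z"
    primary_metric: str              # e.g. "pipeline", "SQLs", "MQLs"
    secondary_metrics: list[str] = field(default_factory=list)
    impact_low: float = 0.0          # conservative monthly pipeline, $
    impact_high: float = 0.0         # optimistic monthly pipeline, $
    priority_score: float = 0.0      # RICE or ICE score
    status: Status = Status.BACKLOG
    start: Optional[date] = None
    end: Optional[date] = None
    handoff_artifacts: list[str] = field(default_factory=list)

    def advance(self) -> None:
        """Move the card to the next stage; raise if it is already Complete."""
        stages = list(Status)
        i = stages.index(self.status)
        if i == len(stages) - 1:
            raise ValueError(f"{self.name!r} is already Complete")
        self.status = stages[i + 1]
```

Keeping `Status` as an enum rather than free text means a card can only move through the five stages in order, which makes "what's live right now?" a reliable query.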

How we define impact — the $10k/month math

We don’t guess pipeline. I ask: “If this experiment moves the needle, how much pipeline will it create per month?” Then we work backwards from realistic conversion rates:

- Leads -> SQL conversion: 20% (adjust to your own funnel)

- SQL -> Opportunity: 30%

- Average deal size: $20k

Example calculation for a $10k monthly pipeline target:

- Monthly pipeline / Avg deal size = number of opportunities needed: 10,000 / 20,000 = 0.5 opps

- Opps needed / SQL->Opp = 0.5 / 0.3 ≈ 1.67 SQLs

- SQLs needed / Lead->SQL = (0.5 / 0.3) / 0.2 ≈ 8.3 leads

So an experiment that reliably adds ~9 qualified leads a month (8.3, rounded up) is enough to justify it under these conversion assumptions. Framing experiments with an explicit pipeline target forces us to prioritize things like lead quality, routing, and sales enablement, not just volume.
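Here is the same backwards calculation as a small Python function, using the conversion assumptions above. It is a sketch for plugging in your own rates, not a forecasting model; `leads_needed` is a hypothetical helper name.

```python
import math


def leads_needed(monthly_pipeline: float, avg_deal_size: float,
                 sql_to_opp: float, lead_to_sql: float) -> float:
    """Work backwards from a monthly pipeline target to the leads required."""
    opps = monthly_pipeline / avg_deal_size  # opportunities needed
    sqls = opps / sql_to_opp                 # SQLs needed to produce those opps
    return sqls / lead_to_sql                # leads needed to produce those SQLs


# Inputs from the text: $10k target, $20k deals, 30% SQL->Opp, 20% Lead->SQL.
raw = leads_needed(10_000, 20_000, sql_to_opp=0.3, lead_to_sql=0.2)
print(f"{raw:.1f} leads/month, plan for {math.ceil(raw)}")
# prints: 8.3 leads/month, plan for 9
```

Rounding up with `math.ceil` only at the end avoids the drift you get from rounding each intermediate step.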

Scoring & prioritization: pick what moves pipeline fastest

I use a simplified RICE framework adapted for cross-functional work:

- Reach — how many leads/users will this touch in a month?

- Impact — projected uplift to primary metric (scaled 1–5)

- Confidence — data/precedent to support the idea (1–5)

- Effort — cross-functional cost, estimated in person-weeks and mapped to a 1–5 scale (lower is better)

Score = (Reach * Impact * Confidence) / Effort. We calculate this score directly in the board and fund the top-scoring experiments each sprint cycle (usually monthly). This keeps execution teams from chasing low-impact vanity tests.
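A minimal sketch of the scoring step in Python. The formula is the one above; `Candidate` and `fund_top` are hypothetical names, and the backlog entries and their inputs are made-up numbers for illustration only.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    reach: int       # leads/users touched per month
    impact: int      # 1-5
    confidence: int  # 1-5
    effort: int      # 1-5, lower is cheaper

    @property
    def score(self) -> float:
        # Score = (Reach * Impact * Confidence) / Effort
        return self.reach * self.impact * self.confidence / self.effort


def fund_top(candidates: list[Candidate], slots: int) -> list[Candidate]:
    """Validate the 1-5 inputs, then return the `slots` highest-scoring candidates."""
    for c in candidates:
        scaled = (("impact", c.impact), ("confidence", c.confidence), ("effort", c.effort))
        for label, value in scaled:
            if not 1 <= value <= 5:
                raise ValueError(f"{c.name}: {label} must be 1-5, got {value}")
    return sorted(candidates, key=lambda c: c.score, reverse=True)[:slots]


# Hypothetical backlog; the numbers are made up for illustration.
backlog = [
    Candidate("Niche product webinar", reach=400, impact=4, confidence=3, effort=3),
    Candidate("High-intent PPC landing page", reach=250, impact=4, confidence=4, effort=2),
    Candidate("Onboarding email CTA test", reach=1200, impact=2, confidence=3, effort=1),
    Candidate("Homepage hero redesign", reach=3000, impact=1, confidence=2, effort=5),
]
for c in fund_top(backlog, slots=3):
    print(f"{c.score:>8.0f}  {c.name}")
```

Because Reach is a raw monthly count while the other three factors are capped at 5, Reach tends to dominate the ranking; bucketing it onto a similar scale is one option if that skews your backlog.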

Cross-functional roles and responsibilities

Clarity in roles is where most teams trip up. My default setup:

- Experiment Owner (Marketing/Growth) — accountable for hypothesis, analytics, and reporting.

- Revenue Ops — ensures tracking, attribution, and CRM workflows are in place.

- Sales/SDR Rep — agrees on lead qualification and follows the handoff playbook.

- Designer/Developer/Product — builds landing pages, test variants, or product changes.

- Executive Sponsor — available to unblock cross-team issues and prioritize resources.

Every experiment must have an owner and at least one cross-functional partner. No exceptions.
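If the board lives in a spreadsheet export or an API, the owner-plus-partner rule is easy to check automatically before the monthly prioritization session. A sketch in Python, assuming each card is a dict with hypothetical `name`, `owner`, and `partners` keys:

```python
from __future__ import annotations


def board_violations(cards: list[dict]) -> list[str]:
    """List every card that breaks the owner + cross-functional partner rule.

    Each card is a dict with hypothetical keys "name", "owner", and "partners".
    """
    problems = []
    for card in cards:
        name = card.get("name", "<unnamed>")
        if not (card.get("owner") or "").strip():
            problems.append(f"{name}: no owner")
        # Ignore blank partner entries left behind by spreadsheet exports.
        partners = [p for p in (card.get("partners") or []) if p.strip()]
        if not partners:
            problems.append(f"{name}: no cross-functional partner")
    return problems
```

An empty return list means every card satisfies the rule; anything else is a card that should not be scored yet.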

Cadence and ceremonies

We run this board on a monthly cycle: ideation, build, launch, analyze. The meeting rhythm looks like this:

- Weekly 30-minute standup on active experiments (owner gives quick updates)

- Monthly prioritization session (1 hour) to score and commit experiments

- Launch readiness check 48 hours before deploy (tracking, UTMs, SDR playbook)

- Post-mortem within 10 business days of experiment end (what we learned + next steps)
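The two date-bound ceremonies can be computed when a card is scheduled. This Python sketch assumes a Monday-to-Friday work week and ignores public holidays; the function names are hypothetical.

```python
from datetime import date, datetime, timedelta


def readiness_check_deadline(deploy_at: datetime) -> datetime:
    """Latest time to run the launch readiness check: 48 hours before deploy."""
    return deploy_at - timedelta(hours=48)


def postmortem_due(end: date, business_days: int = 10) -> date:
    """Count forward Mon-Fri days from the experiment end date (holidays ignored)."""
    day, remaining = end, business_days
    while remaining > 0:
        day += timedelta(days=1)
        if day.weekday() < 5:  # Monday=0 ... Friday=4
            remaining -= 1
    return day


print(readiness_check_deadline(datetime(2024, 3, 6, 10, 0)))  # 2024-03-04 10:00:00
print(postmortem_due(date(2024, 3, 1)))                        # 2024-03-15
```

If your team observes holidays, the simplest extension is to skip any `day` found in a set of holiday dates inside the loop.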

Fast meetings, clear decisions. I refuse to let ideation sessions become velocity killers: if an idea scores high enough on RICE to be funded and has an owner, we build it.

Measurement & instrumentation: never run blind

Tracking is non-negotiable. Before any experiment goes live I require:

- UTM scheme deployed and mapped to CRM fields

- Event tracking in GA4 and Segment (or equivalent)

- Goal mapping in the dashboard (Looker, Metabase, or HubSpot reports)

- Attribution window and rules documented

If revenue ops can’t validate data within 48 hours post-launch, we pause communications until it’s fixed. I’ve lost too many wins to sloppy attribution.
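A minimal UTM-tagging helper in Python, using only the standard library (`urllib.parse`). The `utm_*` parameter names are the standard ones; the normalization rule (lowercase, spaces to hyphens) and the `exp-014` campaign name are assumptions for illustration, so adapt them to whatever your CRM field mapping expects.

```python
from typing import Optional
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def _normalize(value: str) -> str:
    # Assumed convention: lowercase, hyphens for spaces, so "Paid Social" and
    # "paid social" land in the same CRM bucket.
    return "-".join(value.strip().lower().split())


def tag_url(url: str, source: str, medium: str, campaign: str,
            content: Optional[str] = None) -> str:
    """Add standard UTM parameters to a URL, keeping any existing query string."""
    parts = urlsplit(url)
    # Note: repeated query keys collapse to their last value here.
    query = dict(parse_qsl(parts.query))
    utm = {"utm_source": source, "utm_medium": medium, "utm_campaign": campaign}
    if content:
        utm["utm_content"] = content
    query.update({key: _normalize(val) for key, val in utm.items()})
    return urlunsplit(parts._replace(query=urlencode(query)))


print(tag_url("https://example.com/demo?ref=nav", "LinkedIn", "Paid Social", "exp-014 webinar"))
```

Generating links from one function, rather than by hand, is what makes the "UTM scheme mapped to CRM fields" requirement checkable during the 48-hour validation window.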

Operational handoffs that protect pipeline

One small change that multiplied our pipeline: a one-page SDR playbook attached to every experiment card. It contains:

- Who qualifies the lead and how (BANT, tailored to the experiment)

- Suggested outreach sequence and messaging hooks

- Objections expected from the experiment variant

- CRM tags and disposition codes to use

When SDRs know exactly what to do, conversion rates go up. When they don’t, volume means nothing.

Examples of high-impact experiments that hit $10k+/month

- Webinar -> Sales Qualified Leads: Niche, product-focused webinars with reserved seats and a targeted SDR outreach sequence. We ran 3 per quarter and each added ~12 SQLs in month 1.

- Product usage email playbook: For freemium users, we A/B tested onboarding email copy and a CTA that nudged them toward a demo. Small copy changes produced a 30% uplift in demo requests, translating into more than $10k in pipeline per month.

- High-intent PPC landing page: Single-purpose landing pages with social proof + one-step calendar booking. After routing to a dedicated SDR queue, conversion quality improved and monthly pipeline climbed above $10k.

Reporting: what I present to leadership

Leadership wants a simple answer: did experiments move revenue? My monthly report includes:

- Top 3 experiments launched and their RICE scores

- Pipeline attributed this month (dollars) and key conversion rates

- Learnings and next bets

- Operational blockers and resource asks

This keeps the conversation focused on ROI and learning velocity, not clicks or impressions.

Common pitfalls and how I avoid them

Some lessons I learned the hard way:

- If you don’t lock down attribution before launch, the data is useless — I now gate experiments behind tracking checks.

- Too many experiments at once dilute ops capacity, so we cap active tests at 3–5 depending on team size.

- No post-mortem = repeated mistakes — every experiment has a short write-up with next-step decisions.
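The first two pitfalls can be enforced as a single gate before a card moves to Live. A Python sketch; the checklist keys mirror the pre-launch tracking requirements listed earlier, but the key names and the default limit of 5 are hypothetical choices.

```python
from __future__ import annotations

# Hypothetical checklist keys mirroring the pre-launch tracking requirements.
REQUIRED_CHECKS = (
    "utm_mapped_to_crm",
    "events_tracked",
    "dashboard_goal_mapped",
    "attribution_documented",
)


def can_go_live(checks: dict[str, bool], active_count: int,
                wip_limit: int = 5) -> tuple[bool, list[str]]:
    """Gate a card before Live: every tracking check passes and WIP is under the cap."""
    reasons = [f"missing check: {name}" for name in REQUIRED_CHECKS
               if not checks.get(name, False)]
    if active_count >= wip_limit:
        reasons.append(f"{active_count} tests already active (limit {wip_limit})")
    return not reasons, reasons
```

Returning the reasons alongside the boolean gives the weekly standup a concrete list of what is blocking launch, instead of a bare "not ready".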

Run your experiment board like a product backlog: prioritize ruthlessly, ship quickly, measure honestly, and institutionalize the learning.