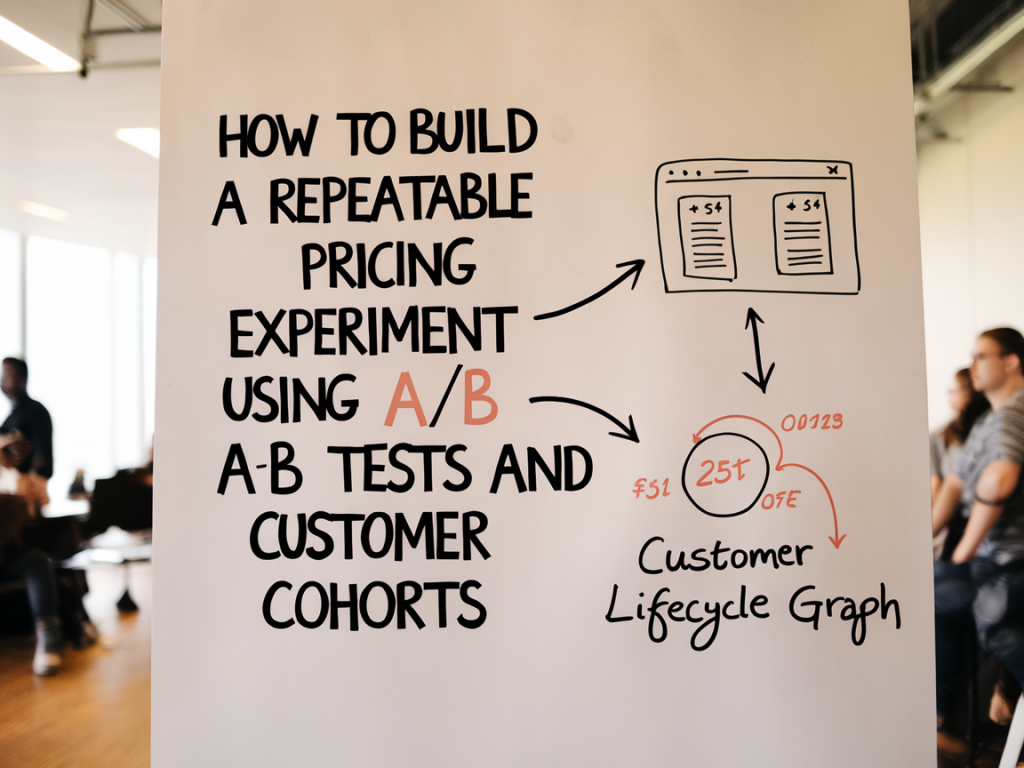

I run pricing experiments the way I run growth experiments: with a clear hypothesis, tight measurement, and a focus on repeatability. Over the past decade I’ve helped startups and mid-market companies test pricing moves that actually move revenue — not just vanity metrics. In this post I’ll walk you through a practical, repeatable framework for running A/B pricing tests combined with customer cohort analysis so you can learn faster and avoid costly mistakes.

Why combine A/B tests with cohort analysis?

A/B testing gives you clean causal evidence about the immediate impact of a price change on conversion or checkout behavior. Cohort analysis shows you how that change plays out over time — retention, churn, LTV, and revenue per cohort. Use A/B tests to validate a hypothesis, and cohort analysis to estimate the long-term economics. Run them together and you won’t be surprised by short-term wins that destroy unit economics a few months later.

Before you start: the questions you must answer

- What’s the objective? Are you optimizing for conversion, average revenue per user (ARPU), gross churn, or something else?

- What’s the hypothesis? Example: “A lower entry price will increase paid conversion by 30% without increasing 3‑month churn by more than 10%.”

- Which segments matter? New leads, trialers, existing customers, SMB vs enterprise — each can respond differently.

- What’s the minimum detectable effect (MDE)? If your traffic can’t support detecting a 5% uplift within a reasonable test window, don’t design the test around one.

Designing the experiment

Designing a repeatable pricing experiment is about balancing statistical power, operational safety and speed. Here’s a template I use.

- Define primary and secondary metrics. Primary example: paid conversion rate (trial → paid) or checkout conversion for freemium. Secondary examples: 7-day retention, 30-day gross churn, ARPU, signups.

- Pick cohorts and bucketing rules. Decide whether you’re splitting by visitor, account, or browser cookie. For B2B SaaS always bucket by account ID to avoid leakage across teammates.

- Sample size and duration. Use an A/B test calculator (Optimizely, Evan Miller’s calculator, or built-in calculators in experiment platforms) to compute sample size. If you need to measure retention, your duration must cover the retention window (e.g., 30 or 90 days).

- Reject/keep criteria. Predefine what results will make you roll back or roll forward the price. Example: trigger rollback if conversion drops by >10% with p < 0.05, or if 30-day revenue per user falls below target.
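As a rough illustration of the sample-size step, here is a minimal sketch of the standard two-proportion calculation that online calculators typically implement. The function name, the 6% baseline and the default alpha and power values are my assumptions for illustration, not figures from a real test.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed per variant to detect a relative lift
    in a conversion rate with a two-sided z-test on two proportions."""
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical inputs: 6% baseline trial-to-paid, hoping to detect a 30% relative lift.
print(sample_size_per_variant(baseline=0.06, relative_mde=0.30))
```

With these assumed inputs the answer is roughly 3,100 users per variant. Halving the MDE roughly quadruples the requirement, so tests designed around small uplifts get expensive quickly.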

Practical setup: tools and instrumentation

Here’s what I typically wire up to run a robust pricing experiment:

- Experiment platform: Optimizely, VWO, or feature-flagging tools like LaunchDarkly or Split.io for server-side price variations. For checkout experiments I prefer server-side flags to prevent client-side flicker and ensure consistent billing behavior.

- Analytics: Mixpanel, Amplitude or Segment for event tracking, and BigQuery or Snowflake for cohort queries. I track events like view_price, add_to_cart, checkout_start, purchase, subscription_renewal, cancellation_request.

- Billing & revenue: Stripe or Recurly events should be synced to analytics so revenue and churn are tied to experiment cohorts.

- CRM/OPS: Ensure CS and billing teams know about the test. Tag accounts so support responses don’t bias user behavior (e.g., special discounts given retrospectively).

Example experiment flow

Here’s a simple flow I use for a new pricing tier experiment on a SaaS checkout:

- Create two variants: Control (current price) and Variant (new price or new feature bundle + price).

- Bucket users by account ID at the moment they first see the price page; persist that assignment in your database and pass it to analytics and billing.

- Track events: price_view, plan_selected, checkout_completed, trial_started, payment_success, renewal, cancellation.

- Collect at least N users per variant (per your sample-size calc) and run the test for the pre-defined duration. Continue tracking cohorts post-conversion for retention/LTV analysis.
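The bucketing step in this flow can be sketched with a deterministic hash, so the same account always lands in the same variant even before the assignment is persisted. This is one common approach rather than what any particular platform does; `assign_variant`, the experiment key and the 50/50 split are hypothetical names and values.

```python
import hashlib


def assign_variant(account_id: str, experiment: str, variant_share: float = 0.5) -> str:
    """Deterministically map an account to 'control' or 'variant'.

    Hashing the experiment key together with the account ID keeps assignments
    stable across sessions and independent between experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{account_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # uniform value in [0, 1)
    return "variant" if bucket < variant_share else "control"


# Hypothetical usage: compute once on first price-page view, then store the result
# alongside the account and attach it to every analytics and billing event.
assignment = assign_variant("acct_12345", "pricing-tier-test")
print(assignment)
```

Persisting the assignment still matters. If you later change `variant_share`, a purely hash-based lookup would move some accounts between variants mid-test.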

Key metrics and how to read them

You need both immediate conversion metrics and cohort-derived lifecycle metrics:

- Immediate: view → checkout conversion, checkout → purchase conversion, revenue per checkout.

- Short-term (7–30 days): trial-to-paid conversion, short churn, expansion or downgrade events.

- Long-term (30–90+ days): gross churn, net dollar retention (NDR), cohort LTV.

Run these analyses by cohort month/week of acquisition or test exposure. A simple cohort table I use looks like this:

| Cohort (Week) | Variant | Users | Paid Conversion % | 30d Churn % | ARPU 30d |
|---|---|---|---|---|---|
| 2025-09-01 | Control | 1,200 | 6.2% | 12% | $28 |
| 2025-09-01 | Variant | 1,150 | 8.0% | 18% | $26 |

The table shows a lift in conversion (6.2% to 8.0%) but worse 30-day churn (12% to 18%) and slightly lower ARPU, which suggests a lower price may be bringing in less-qualified buyers. That’s exactly why cohort LTV matters.
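To make the trade-off in those two rows concrete, here is a back-of-the-envelope sketch. It rests on assumptions the table doesn't state: that ARPU 30d is measured per paying customer, that 30-day churn stays constant every month, and that lifetime value can be approximated as ARPU divided by churn. Treat the outputs as directional, not as a forecast.

```python
from dataclasses import dataclass


@dataclass
class CohortRow:
    variant: str
    users: int
    paid_conversion: float  # share of exposed users who pay
    churn_30d: float        # assumed constant monthly churn among payers
    arpu_30d: float         # assumed to be revenue per paying customer


def revenue_per_exposed_user_30d(row: CohortRow) -> float:
    """First-month revenue spread over everyone who saw the price."""
    return row.paid_conversion * row.arpu_30d


def simple_ltv_per_exposed_user(row: CohortRow) -> float:
    """Naive LTV (ARPU / churn) weighted by conversion; a rough approximation."""
    return row.paid_conversion * row.arpu_30d / row.churn_30d


rows = [
    CohortRow("Control", 1200, 0.062, 0.12, 28.0),
    CohortRow("Variant", 1150, 0.080, 0.18, 26.0),
]
for row in rows:
    print(f"{row.variant}: 30d ${revenue_per_exposed_user_30d(row):.2f}, "
          f"naive LTV ${simple_ltv_per_exposed_user(row):.2f} per exposed user")
```

Under these assumptions the variant earns more in the first 30 days (about $2.08 vs $1.74 per exposed user) but less over the customer lifetime (about $11.56 vs $14.47), which is the "short-term win, long-term loss" pattern in miniature.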

Statistical significance vs business significance

Don’t stop at p-values. A statistically significant uplift of 2% might not be meaningful if the smallest change that matters to your business is 10%, or if the revenue impact turns neutral or negative once you factor in churn. Conversely, an uplift that misses strict significance but shows consistent direction across cohorts and a reasonable effect size can be actionable. I recommend:

- Report confidence intervals and effect sizes, not just p-values.

- Use Bayesian or sequential testing if you need flexibility in stopping rules — but predefine priors and stopping boundaries.

- Always check heterogeneity: break results down by segment (SMB vs Enterprise, geo, traffic source).
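For the first recommendation, a minimal sketch of reporting an effect size with a confidence interval might look like this. It uses a plain Wald (normal-approximation) interval for the difference of two proportions. The function name is hypothetical, and the conversion counts are assumed values chosen to approximate the cohort table earlier in the post.

```python
from math import sqrt
from statistics import NormalDist


def lift_with_ci(conv_a: int, n_a: int, conv_b: int, n_b: int,
                 confidence: float = 0.95) -> tuple[float, float, float]:
    """Absolute difference in conversion rate (B minus A) with a Wald interval."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return diff, diff - z * se, diff + z * se


# Assumed counts: 74 of 1,200 control users and 92 of 1,150 variant users converted.
diff, low, high = lift_with_ci(74, 1200, 92, 1150)
print(f"absolute lift {diff:.2%}, 95% CI [{low:.2%}, {high:.2%}]")
```

With these assumed counts the interval runs from slightly below zero to roughly four percentage points, so a single weekly cohort of this size cannot rule out "no effect" on its own. That is the kind of context a bare p-value hides.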

Common pitfalls and how I avoid them

- Leakage across accounts: Bucket at account level for B2B; bucket at user level for B2C but persist assignment across devices if possible.

- Discount contamination: If sales or CS give discounts, tag the account so you can exclude or analyze separately.

- Underpowered tests: Don’t launch tests that can’t detect business-relevant changes. Increase traffic, raise your MDE, or run sequential tests focused on bigger changes.

- Short observation windows: Pricing affects retention. Measure 30–90 days depending on your billing cadence before calling it.

How to make the experiment repeatable

Repeatability comes from templates and automation:

- Create a pricing experiment template that includes hypothesis, metrics, sample-size calc, bucketing rules, instrumentation checklist and rollback criteria.

- Use feature flags and automation (CI/CD) so variants are implemented consistently across environments.

- Automate cohort reports in your BI (scheduled queries in BigQuery) so each new test produces the same set of tables and charts.

- Keep an experiments logbook with learnings and metadata (start/end dates, traffic sources, known anomalies).

When I run experiments for clients I also save the cohort SQL and the exact billing event mapping — that single artifact prevents rework and ensures future experiments can be compared apples-to-apples.
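The saved cohort query is usually SQL in the warehouse. As a language-neutral illustration of what it computes, here is a small sketch that builds the same cohort-by-variant table from per-account records. The record fields (`exposed_on`, `variant`, `converted`, `churned_30d`, `revenue_30d`) are hypothetical names, not a schema from any specific tool.

```python
from collections import defaultdict
from datetime import date, timedelta


def week_start(d: date) -> date:
    """Monday of the week containing d, used as the cohort key."""
    return d - timedelta(days=d.weekday())


def cohort_table(accounts: list[dict]) -> list[dict]:
    """Aggregate per-account records into one row per (cohort week, variant)."""
    groups = defaultdict(list)
    for account in accounts:
        groups[(week_start(account["exposed_on"]), account["variant"])].append(account)

    rows = []
    for (week, variant), members in sorted(groups.items()):
        paid = [m for m in members if m["converted"]]
        churned = sum(1 for m in paid if m["churned_30d"])
        revenue = sum(m["revenue_30d"] for m in paid)
        rows.append({
            "cohort_week": week.isoformat(),
            "variant": variant,
            "users": len(members),
            "paid_conversion": len(paid) / len(members),
            # Churn and ARPU are per paying customer; undefined if nobody paid.
            "churn_30d": churned / len(paid) if paid else None,
            "arpu_30d": revenue / len(paid) if paid else None,
        })
    return rows
```

Keeping the definitions in one function (or one saved query) means that "churn" and "ARPU" carry the same meaning in every experiment, which is what makes results comparable across tests.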

Deciding to roll out or iterate

When the test ends, ask these questions before a rollout:

- Do the primary metrics meet your acceptance criteria?

- Does cohort LTV support the short-term gains?

- Are there segments that should keep or not keep the new price?

- Are there operational or legal constraints to a rollout (billing code, tax changes, support training)?

If the answers are mixed, run a focused follow-up: deeper segmentation, feature-bundling variations, or time-limited promotions to explore elasticity. If the answer is clear, plan a staged rollout — by geography or cohort — and continue monitoring.

Pricing experiments are some of the highest-leverage tests a business can run, but they require discipline. If you set up consistent bucketing, instrument revenue correctly, and pair A/B tests with cohort LTV analysis, you’ll avoid the classic trap of “looks good now, breaks later.” If you want, I can share my experiment template (hypothesis + checklist + SQL snippets) so you can plug it straight into your stack.